Video creation is entering a new era, one where you don’t need a camera, actors, or a film crew to get started. Instead, you can type a few lines of text and watch a short, often convincing video clip unfold before your eyes. Welcome to the world of AI-generated video.

Tools like Sora (by OpenAI), Runway Gen-3, and others are transforming storytelling, marketing, journalism, and even education. But what can these systems really do—and where are their limits?

What Is AI-Generated Video?

AI video generation refers to tools that create moving images based on text prompts, images, or pre-recorded content. Unlike traditional CGI, these systems synthesize footage using generative models trained on vast datasets of real-world visuals, movement, and behavior.

Current leading tools include:

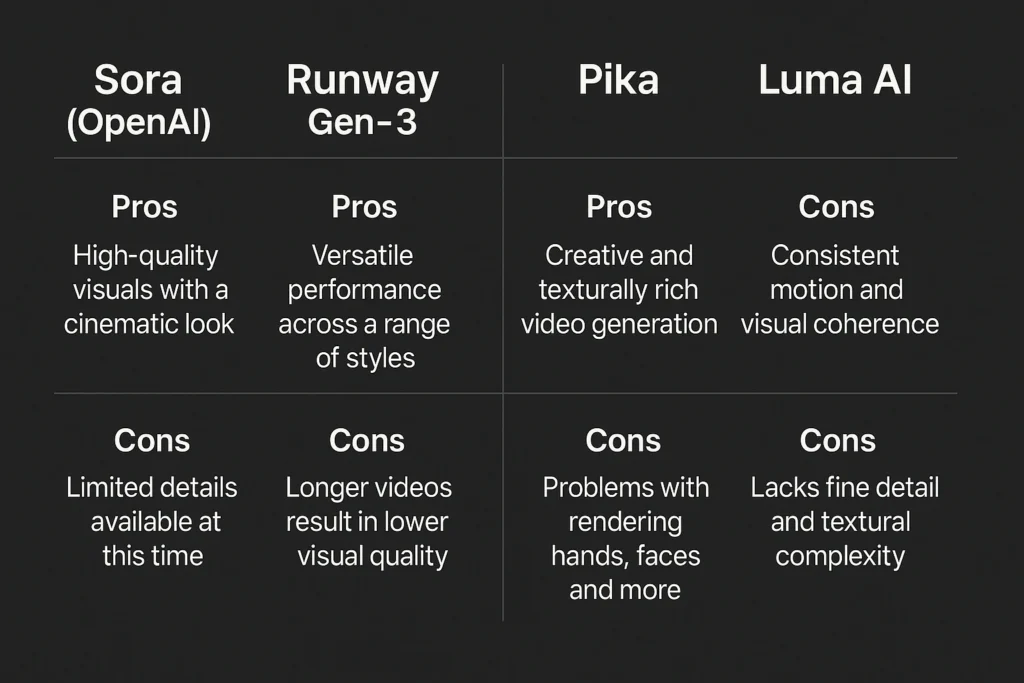

- Sora (OpenAI): Converts detailed text prompts into photorealistic video clips with complex camera movements, realistic lighting, and time progression. OpenAI's initial announcement showed clips of up to 60 seconds; the length available in practice may be shorter.

- Runway Gen-3 Alpha: Focuses on artistic control, style conditioning, and high-speed rendering for creators. Great for surreal, cinematic effects.

- Pika & Luma AI: Allow text-to-video and video-to-video with customizable motion and camera guidance.

How It Works

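These tools are powered by diffusion models and transformer-based architectures, the same families of models behind image generators like DALL·E and text generators like ChatGPT. A diffusion model starts from random noise and removes it step by step until a coherent clip emerges. Rather than “painting” each frame in isolation, the model is typically trained on whole clips, so it learns patterns of lighting, depth, and motion that stay consistent from one frame to the next. It does not run an explicit physics simulation; it approximates physical behavior from what it has seen in training data, which is why results can look plausible and still break physical rules.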
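To make the diffusion idea concrete, here is a minimal sketch in Python with NumPy. It is a toy, not how Sora, Runway, or any other product is implemented: the "video" is a tiny synthetic clip, the noise schedule values are made up, and the function `oracle_noise_prediction` is a hypothetical stand-in for a trained network that cheats by already knowing the target clip. What it does show is the core loop: start from noise over the whole clip (all frames at once) and repeatedly remove the predicted noise.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy "video": 8 grayscale frames of 16x16 with a bright square moving right.
frames, height, width = 8, 16, 16
clip = np.zeros((frames, height, width))
for f in range(frames):
    clip[f, 6:10, f:f + 4] = 1.0

# Noise schedule (illustrative values, not taken from any real model).
steps = 50
betas = np.linspace(1e-4, 0.2, steps)
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal left at each step


def oracle_noise_prediction(x_t, t):
    """Hypothetical stand-in for a trained network.

    A real model would predict the noise from x_t and a text prompt.
    This one cheats: it knows the target clip and solves for the noise.
    """
    return (x_t - np.sqrt(alpha_bar[t]) * clip) / np.sqrt(1.0 - alpha_bar[t])


def sample(predict_noise):
    """Deterministic (DDIM-style) reverse process over the whole clip."""
    x = rng.standard_normal(clip.shape)  # pure noise for all frames jointly
    for t in range(steps - 1, -1, -1):
        eps = predict_noise(x, t)
        # Estimate the clean clip implied by the current noise prediction.
        x0_estimate = (x - np.sqrt(1.0 - alpha_bar[t]) * eps) / np.sqrt(alpha_bar[t])
        # Step to the previous, less noisy level (no noise left after t == 0).
        ab_prev = alpha_bar[t - 1] if t > 0 else 1.0
        x = np.sqrt(ab_prev) * x0_estimate + np.sqrt(1.0 - ab_prev) * eps
    return x


generated = sample(oracle_noise_prediction)
print("max error vs. target clip:", np.abs(generated - clip).max())
```

Because the stand-in predictor is perfect, the loop recovers the target clip almost exactly. A real model has to learn that prediction from training data, and its mistakes are where glitches in the output come from.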

Some tools even let you:

- Extend existing footage

- Animate still images

- Change backgrounds in live video

- Mimic camera moves like dolly zooms or drone flyovers

Use Cases in 2025

- Marketing & Product Ads: Brands use AI to generate dynamic, localized, or seasonal ad variations—without needing a shoot.

- Music Videos & Art Films: Indie creators generate entire dreamlike sequences with zero crew.

- Education: AI creates visual explainers, science animations, and historical reconstructions based on curriculum text.

- Previsualization: Film and game studios use it for scene prototyping—quick, cheap, flexible.

The Limits (for Now)

- Hands, faces, and fine motion still glitch, especially with complex prompts.

- Lip-sync and audio integration are not yet reliable.

- Prompt engineering matters: vague inputs lead to generic or inconsistent visuals.

- Ethical concerns: Deepfakes, misinformation, copyright confusion, and job disruption remain hotly debated.
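On the prompt engineering point, one way to avoid vague inputs is to treat a prompt as a set of named parts and fill in each one. The sketch below is only an illustration: `ShotPrompt` and its fields are hypothetical names chosen for this example, not the input format of Sora, Runway, Pika, or Luma, all of which accept free-form text.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical helper that assembles a text prompt from named parts."""
    subject: str
    action: str
    setting: str
    camera: str = ""
    lighting: str = ""
    style: str = ""

    def to_text(self) -> str:
        # Core sentence first, then any optional details that were provided.
        parts = [f"{self.subject} {self.action} {self.setting}".strip()]
        parts += [p for p in (self.camera, self.lighting, self.style) if p]
        return ", ".join(parts)


vague = ShotPrompt("a dog", "running", "outside")
specific = ShotPrompt(
    subject="a golden retriever",
    action="sprinting through shallow surf",
    setting="on an empty beach at sunrise",
    camera="low-angle tracking shot",
    lighting="warm backlight",
    style="shot on 35mm film",
)

print(vague.to_text())
print(specific.to_text())
```

The first prompt leaves breed, location, framing, and look up to the model, which tends to produce generic footage. The second pins those choices down, so there is less for the model to guess.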

So… What’s Next?

AI-generated video is not replacing all video—yet. But it’s democratizing visual storytelling. In the same way that stock photos, smartphones, and Canva opened up graphic design, these tools are opening video to a new wave of creators.

It’s fast, it’s imperfect, it’s evolving—and it’s one of the most powerful creative technologies of this decade.